Terraform EKS Cluster Example

Terraform simplifies the process of provisioning resources by allowing you to define infrastructure as code. When setting up an Elastic Kubernetes Service (EKS) cluster on AWS, Terraform manages the entire workflow, automating resource creation and configuration.

AWS EKS, combined with Terraform, ensures you can deploy highly available Kubernetes clusters in a reliable and automated manner. This article outlines how to create an EKS cluster using Terraform, guiding you through the key steps and considerations.

What Is an EKS Cluster?

Amazon Elastic Kubernetes Service (EKS) is a fully managed platform designed to make it easier to run containerized applications using Kubernetes. Kubernetes is an open-source system that automates the deployment, scaling, and management of containers.

With EKS, AWS handles the operational aspects of running Kubernetes clusters, ensuring that the control plane is distributed across multiple Availability Zones for high availability.

EKS manages the infrastructure behind the scenes, allowing businesses to take advantage of Kubernetes’ orchestration capabilities without maintaining the control plane hardware themselves. AWS scales and patches the control plane, so you can focus on your applications.

How Do I Create a Cluster in AWS With Terraform?

Before we can create the cluster, we need to ensure Terraform is installed and properly configured to communicate with AWS. This section covers the essential steps required to get Terraform ready for deployment, from installation to AWS credentials configuration.

Setting Up Terraform for EKS Cluster Deployment

Terraform can be installed via your package manager or downloaded from its official site. Once installed, configure AWS credentials using the AWS CLI:

aws configure

This command sets up the necessary AWS credentials and region settings, allowing Terraform to interact with your AWS environment.

Next, we’ll initialize Terraform, which prepares the working directory for use. This is done by running:

terraform init

Initialization downloads the provider plugins (in this case, AWS) and any modules the configuration references. Run it from the directory that contains your .tf files, and run it again whenever you add a provider or module.
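Because terraform init resolves providers from the configuration, it can help to declare them explicitly. The following is a minimal sketch, not part of the original setup: both version constraints are illustrative assumptions, and you should choose ones compatible with the modules you use.

```hcl
terraform {
  # Assumption: any Terraform 1.x CLI.
  required_version = ">= 1.0"

  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Assumption: illustrative constraint, not taken from the article.
      version = "~> 4.0"
    }
  }
}
```

With a block like this in place, terraform init records the selected provider version in the .terraform.lock.hcl file, so later runs use the same release.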

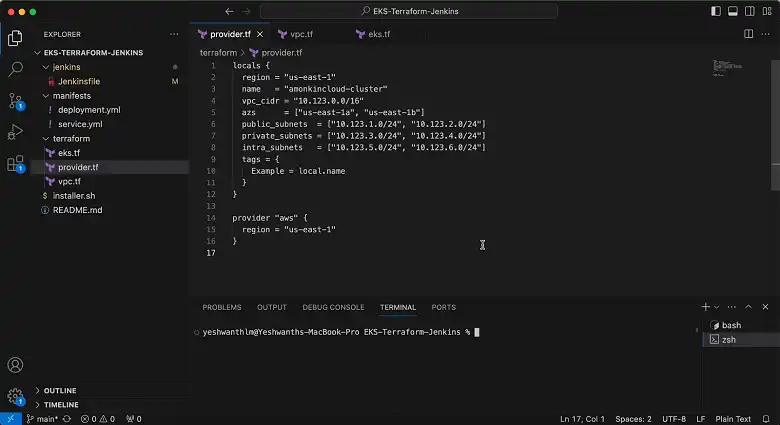

Terraform Configuration for AWS Provider

With Terraform set up, the next step is to configure the AWS provider. This configuration defines the region and credentials Terraform will use to interact with your AWS account.

provider "aws" {
  region = "us-west-2"
}

Here, we specify the us-west-2 region. You can change this to the AWS region you wish to use. Terraform uses this provider block to authenticate and deploy resources into your chosen region.
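If you expect to deploy to more than one region, the region could come from a variable instead of a literal. This is a sketch of that variant, to be used in place of the block above rather than alongside it. The variable name aws_region and the tag values are hypothetical, and default_tags assumes a provider release that supports it.

```hcl
# Hypothetical variable name; not part of the original article.
variable "aws_region" {
  description = "AWS region to deploy into"
  type        = string
  default     = "us-west-2"
}

provider "aws" {
  region = var.aws_region

  # Tags applied to every taggable resource this provider creates.
  default_tags {
    tags = {
      Project   = "eks-example" # illustrative value
      ManagedBy = "terraform"
    }
  }
}
```

You can then override the region at run time with terraform apply -var="aws_region=eu-west-1". Keep in mind that any Availability Zone names elsewhere in the configuration must belong to the same region.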

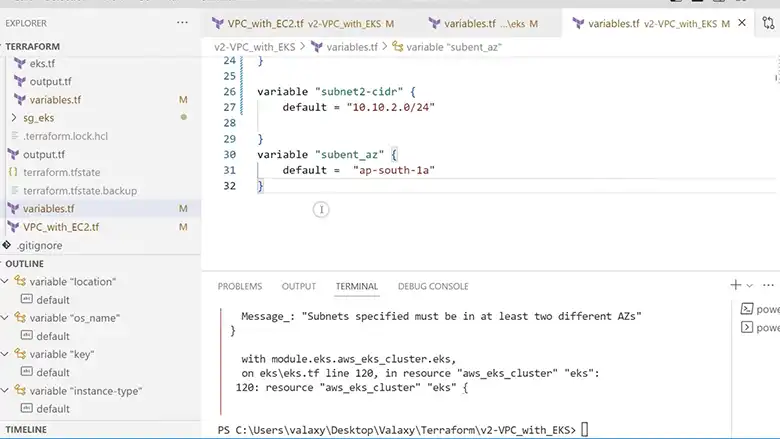

Defining VPC and Subnets for the EKS Cluster

EKS clusters need a network to operate within, so the next step is to define a Virtual Private Cloud (VPC) and its subnets. This provides the infrastructure for your cluster’s communication and operations.

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "3.0"

  name = "eks-vpc"
  cidr = "10.0.0.0/16"

  # Two Availability Zones, each with one private and one public subnet
  azs             = ["us-west-2a", "us-west-2b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  # A single shared NAT gateway gives private subnets outbound internet access
  enable_nat_gateway = true
  single_nat_gateway = true
}

This block creates a VPC with private and public subnets across two availability zones. The NAT gateway allows instances in private subnets to connect to the internet while preventing unsolicited inbound traffic. This is crucial for securing your cluster's resources.
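EKS also expects DNS hostnames to be enabled in the VPC, and Kubernetes load balancer controllers locate subnets through well-known tags. As a sketch of how the same module block might look with those settings added (the extra arguments are additions to the original example, not a replacement recommended by the module authors):

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "3.0"

  name = "eks-vpc"
  cidr = "10.0.0.0/16"

  azs             = ["us-west-2a", "us-west-2b"]
  private_subnets = ["10.0.1.0/24", "10.0.2.0/24"]
  public_subnets  = ["10.0.101.0/24", "10.0.102.0/24"]

  enable_nat_gateway = true
  single_nat_gateway = true

  # Addition: nodes need DNS hostnames to register with the cluster.
  enable_dns_hostnames = true

  # Addition: lets Kubernetes place internet-facing load balancers here.
  public_subnet_tags = {
    "kubernetes.io/role/elb" = "1"
  }

  # Addition: lets Kubernetes place internal load balancers here.
  private_subnet_tags = {
    "kubernetes.io/role/internal-elb" = "1"
  }
}
```

Note that single_nat_gateway keeps cost down but routes all private-subnet egress through one Availability Zone, which is a trade-off to revisit for production.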

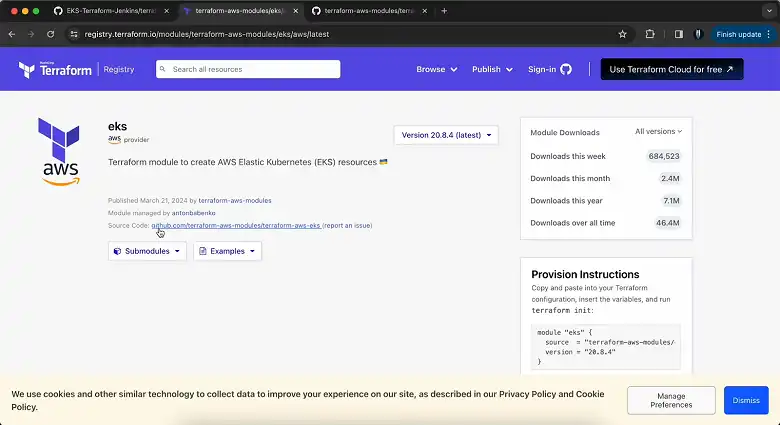

Creating the EKS Cluster and Node Groups

Now that the network is established, we can create the EKS cluster. The cluster acts as the control plane for managing Kubernetes workloads. We’ll also configure the worker node groups that will run the actual applications.

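module "eks" {
  source = "terraform-aws-modules/eks/aws"
  # Pinned because subnets and node_groups are 17.x argument names
  version = "~> 17.0"

  cluster_name    = "my-cluster"
  cluster_version = "1.21"

  # Place the cluster and its nodes in the private subnets created earlier
  subnets = module.vpc.private_subnets
  vpc_id  = module.vpc.vpc_id

  node_groups = {
    eks_nodes = {
      desired_capacity = 2
      max_capacity     = 3
      min_capacity     = 1

      instance_types = ["t3.medium"]
    }
  }
}

In this module, we define an EKS cluster named my-cluster, specifying the Kubernetes version (1.21 in this case) and providing the VPC and subnet details created earlier. EKS retires older Kubernetes versions over time, so set cluster_version to a release that EKS currently supports. The module version is pinned to the 17.x line because later major versions renamed the subnets and node_groups arguments. The node group defines the type and number of worker nodes: it starts with 2 nodes and can range from a minimum of 1 to a maximum of 3, which leaves room to scale the group up or down.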
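Older releases of the EKS module (the 17.x line, which the subnets and node_groups argument names match) manage the aws-auth ConfigMap through the kubernetes provider by default, so that provider usually needs credentials for the new cluster. The sketch below shows one common way to supply them. It assumes that module.eks.cluster_id is the cluster name output in that release line; the data source labels are arbitrary.

```hcl
# Look up connection details for the cluster the module creates.
data "aws_eks_cluster" "cluster" {
  name = module.eks.cluster_id # assumption: 17.x output holding the cluster name
}

# Short-lived authentication token for the same cluster.
data "aws_eks_cluster_auth" "cluster" {
  name = module.eks.cluster_id
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.cluster.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.cluster.token
}
```

If you use a newer major version of the module, check its upgrade notes first, since both the argument names and the way cluster access is managed differ.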

Applying the Terraform Configuration

Once all configuration files are defined, apply the Terraform changes to create the infrastructure. This section will guide you through verifying and deploying the configuration using Terraform commands.

terraform plan

The plan command previews the changes that Terraform will make, allowing you to verify the configuration before applying it. If everything looks correct, apply the changes by running:

terraform apply

Terraform shows the plan again and asks for confirmation before making changes. Once confirmed, this command deploys the infrastructure as defined, creating the VPC, subnets, EKS cluster, and worker nodes. Terraform works out the dependencies between these resources and creates them in the correct order.

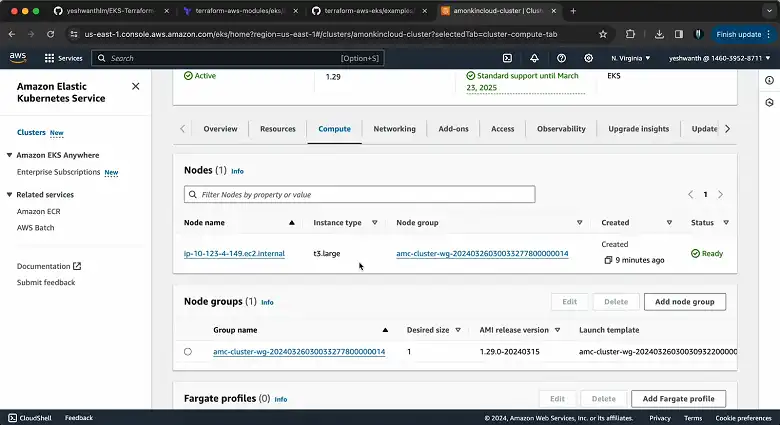

Connecting to the EKS Cluster

After the infrastructure is created, the final step is to connect to your EKS cluster using the kubectl command-line tool. The AWS CLI writes the kubeconfig entry that kubectl needs:

aws eks --region us-west-2 update-kubeconfig --name my-cluster

This command configures kubectl to interact with the newly created cluster. Once it is set up, you can confirm the connection with kubectl get nodes and begin deploying workloads on your Kubernetes cluster.
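To avoid retyping the cluster name, the configuration could expose it through Terraform outputs. This is a sketch under the same assumption as before, namely that the 17.x module exposes the cluster name as cluster_id; the output names are hypothetical.

```hcl
output "cluster_name" {
  description = "Name of the EKS cluster"
  # Assumption: in the 17.x module, cluster_id holds the cluster name.
  value = module.eks.cluster_id
}

output "cluster_endpoint" {
  description = "URL of the Kubernetes API server"
  value       = module.eks.cluster_endpoint
}

output "configure_kubectl" {
  description = "Command that writes a kubeconfig entry for this cluster"
  value       = "aws eks --region us-west-2 update-kubeconfig --name ${module.eks.cluster_id}"
}
```

After terraform apply completes, running terraform output prints these values, and you can copy the configure_kubectl command directly into your shell.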

Frequently Asked Questions

How long does an EKS cluster take to create?

AWS has shortened the time it takes to set up a new EKS cluster. Creating the control plane, the core component of the cluster, now takes less than 9 minutes on average, which is about 40% faster than before. Worker nodes are provisioned after the control plane, so the full deployment takes somewhat longer.

How many nodes are in an EKS cluster?

There’s a limit on how many managed node groups you can have in a single EKS cluster. The figures commonly quoted are up to 10 groups with a maximum of 100 nodes each, for a total of 1,000 managed nodes on a single EKS cluster. These are default service quotas rather than hard limits: they have changed over time and can be raised on request, so check the current EKS service quotas for your account.

What is the maximum size of an EKS cluster?

EKS can handle large-scale deployments, but if you plan to go beyond 300 nodes or 5,000 pods, you should have a clear scaling strategy in place. These numbers aren’t exact, but they’re based on our experience working with many EKS users and experts.

Conclusion

In this article, we’ve explored the power of Terraform in automating the creation and management of Amazon EKS clusters. By leveraging Terraform’s infrastructure as code (IaC) approach, we’ve demonstrated how to efficiently provision, configure, and scale Kubernetes environments on AWS.
