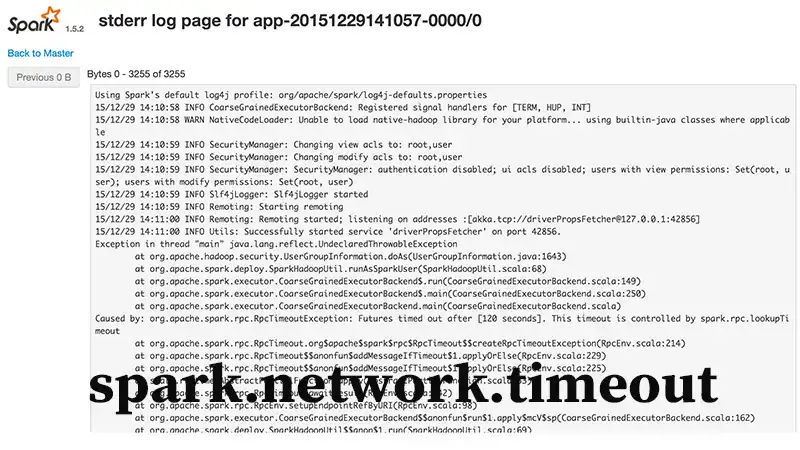

Demystifying `spark.network.timeout` in Apache Spark

In Apache Spark, where work is spread across a driver and many executors, reliable communication between nodes is essential for smooth operation. Enter `spark.network.timeout`, a configuration parameter that decides how long Spark waits on the network before concluding that something has gone wrong.

Let’s unravel the mysteries surrounding this parameter, breaking down its definition, functionality, and crucial role in maintaining the health of Spark applications.

Defining `spark.network.timeout`

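`spark.network.timeout` is Apache Spark's default timeout for all network interactions: it sets the maximum time Spark will wait for a network request to complete before throwing in the towel. It also serves as the fallback for more specific settings, namely `spark.storage.blockManagerHeartbeatTimeoutMs`, `spark.shuffle.io.connectionTimeout`, `spark.rpc.askTimeout`, and `spark.rpc.lookupTimeout`, whenever those are not set explicitly. This timeout acts as a safeguard, preventing Spark applications from hanging indefinitely in the face of network glitches or disruptions.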
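To make the fallback behavior concrete, here is a toy model in Python (not Spark's own code). It assumes only what Spark's configuration documentation states: when `spark.storage.blockManagerHeartbeatTimeoutMs`, `spark.shuffle.io.connectionTimeout`, `spark.rpc.askTimeout`, or `spark.rpc.lookupTimeout` is left unset, `spark.network.timeout` is used in its place. The function name `effective_timeouts` is hypothetical, and the sketch ignores unit conversion entirely.

```python
from __future__ import annotations

# Toy model (not Spark's code): spark.network.timeout as a fallback value.
NETWORK_TIMEOUT_KEY = "spark.network.timeout"
DEFAULT_NETWORK_TIMEOUT = "120s"  # documented default

# More specific timeouts that fall back to spark.network.timeout when unset.
FALLBACK_KEYS = (
    "spark.storage.blockManagerHeartbeatTimeoutMs",
    "spark.shuffle.io.connectionTimeout",
    "spark.rpc.askTimeout",
    "spark.rpc.lookupTimeout",
)


def effective_timeouts(conf: dict[str, str]) -> dict[str, str]:
    """Resolve each specific timeout, using spark.network.timeout when unset.

    Hypothetical helper; unit handling is deliberately ignored.
    """
    network_timeout = conf.get(NETWORK_TIMEOUT_KEY, DEFAULT_NETWORK_TIMEOUT)
    return {key: conf.get(key, network_timeout) for key in FALLBACK_KEYS}


# Example: raise the global timeout but pin the RPC ask timeout explicitly.
resolved = effective_timeouts(
    {"spark.network.timeout": "300s", "spark.rpc.askTimeout": "60s"}
)
print(resolved["spark.rpc.askTimeout"])                # 60s
print(resolved["spark.shuffle.io.connectionTimeout"])  # 300s
```

The takeaway: raising `spark.network.timeout` alone quietly raises every related timeout you have not pinned yourself.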

The Role It Plays

Imagine a Spark cluster as a team of collaborators, each contributing their computational prowess. Now, if one of these collaborators (an executor on a worker node) stops responding, for example because of a long garbage-collection pause or a network partition, `spark.network.timeout` steps in as the timekeeper.

It ensures that the driver doesn't linger in limbo, waiting endlessly for the absent colleague to return. Executors send periodic heartbeats to the driver; if none arrives within the timeout, the driver marks that executor as lost and reschedules its tasks on the remaining executors, so the job can keep moving.
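The timekeeper role can be sketched as a heartbeat tracker. This is a simplified model: the `HeartbeatMonitor` class is hypothetical, it takes explicit timestamps instead of reading a real clock, and it assumes a 120-second threshold, which is what the executor-loss check uses when `spark.network.timeout` is at its default and no more specific heartbeat timeout is set.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class HeartbeatMonitor:
    """Hypothetical driver-side tracker: an executor is treated as lost once
    it has been silent for longer than timeout_s seconds."""

    timeout_s: float = 120.0  # mirrors the 120s default of spark.network.timeout
    last_seen: dict[str, float] = field(default_factory=dict)

    def heartbeat(self, executor_id: str, now: float) -> None:
        # Record when this executor last reported in.
        self.last_seen[executor_id] = now

    def expired(self, now: float) -> list[str]:
        # Executors whose silence has exceeded the timeout, in sorted order.
        return sorted(
            eid for eid, seen in self.last_seen.items() if now - seen > self.timeout_s
        )

    def remove_expired(self, now: float) -> list[str]:
        # Forget lost executors so their tasks could be rescheduled elsewhere.
        lost = self.expired(now)
        for eid in lost:
            del self.last_seen[eid]
        return lost


monitor = HeartbeatMonitor(timeout_s=120.0)
monitor.heartbeat("exec-1", now=0.0)
monitor.heartbeat("exec-2", now=0.0)
monitor.heartbeat("exec-1", now=100.0)    # exec-2 goes quiet after t=0
print(monitor.remove_expired(now=130.0))  # ['exec-2']
```

With `timeout_s=20.0`, the same tracker would flag an executor that was merely paused for 30 seconds, which is exactly the trade-off the timeout value controls.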

Why `spark.network.timeout` Matters

1. Preventing Application Hangs

In the absence of a timeout mechanism, a stalled or unresponsive node could bring the entire Spark application to a standstill, with every task that depends on it waiting forever. `spark.network.timeout` acts as a safety net, capping how long the system will wait for a response.
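The safety-net idea is the same one behind any bounded wait. The Python sketch below uses the standard `concurrent.futures` module to cap how long a caller waits for a simulated remote fetch; `fetch_block` and `fetch_with_timeout` are hypothetical stand-ins, not Spark APIs.

```python
import concurrent.futures
import time


def fetch_block(delay_s: float) -> str:
    # Hypothetical stand-in for a remote request: sleep to mimic a slow peer.
    time.sleep(delay_s)
    return "block-data"


def fetch_with_timeout(pool, delay_s: float, timeout_s: float):
    """Wait at most timeout_s seconds for the simulated fetch, then give up."""
    future = pool.submit(fetch_block, delay_s)
    try:
        return future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # The caller stops waiting; note the worker thread itself keeps running.
        return None


with concurrent.futures.ThreadPoolExecutor(max_workers=2) as pool:
    print(fetch_with_timeout(pool, delay_s=0.01, timeout_s=1.0))  # block-data
    print(fetch_with_timeout(pool, delay_s=0.5, timeout_s=0.05))  # None
```

Returning `None` is just one way to signal "gave up"; a real system would typically raise or trigger a retry instead.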

2. Fault Tolerance

Distributed systems are prone to occasional glitches, and network issues are a common culprit. Because its waits are bounded, Spark can detect a node that has stopped communicating and recover, for example by rerunning that node's tasks elsewhere, without jeopardizing the entire job.
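Recovery after a timeout can be pictured as failover. The following is a deliberately simplified, hypothetical sketch: each executor has a simulated response time, and any executor that would exceed the timeout is skipped in favor of the next one. Spark's real scheduler is far more involved; this only illustrates why a bounded wait makes recovery possible at all.

```python
from __future__ import annotations


def run_with_failover(response_times: dict[str, float], timeout_s: float) -> str | None:
    """Hypothetical failover: try executors in order and return the first one
    whose simulated response time is within timeout_s."""
    for executor_id, response_s in response_times.items():
        if response_s <= timeout_s:
            return executor_id  # the task completes on this executor
        # Otherwise this executor timed out; move the task to the next one.
    return None  # every executor timed out, so the task cannot complete


# exec-1 never answers (infinite response time); exec-2 responds in 3 seconds.
cluster = {"exec-1": float("inf"), "exec-2": 3.0}
print(run_with_failover(cluster, timeout_s=120.0))  # exec-2
```

Without a finite `timeout_s`, the loop would never get past `exec-1`, which is the hang described in the previous section.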

3. Customization for Network Latency

Every cluster has its own characteristics, and network latency and load vary from one environment to the next. Setting `spark.network.timeout` lets you tailor the timeout to your environment: long enough to tolerate the pauses you expect, yet short enough that genuinely failed nodes are detected promptly.

Frequently Asked Questions

1. What Is The Default Value Of `spark.network.timeout`?

Ans: The default value is 120 seconds or 2 minutes.

2. How Should I Determine The Right Value For `spark.network.timeout`?

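Ans: Start with the default value and increase it if healthy executors are regularly flagged as lost, for example because of heavy garbage collection or a congested network. The timeout should comfortably exceed the longest pause you expect, not just typical network latency. Spark's documentation also recommends keeping `spark.executor.heartbeatInterval` significantly smaller than `spark.network.timeout`.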
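When you do raise the timeout, it helps to keep the heartbeat interval in step. The helper below is a hypothetical sketch that builds `spark-submit --conf` flags and rejects a heartbeat interval that is not strictly below the network timeout. Its duration parser handles only `ms`, `s`, `m`/`min`, and `h` suffixes, an assumption made for brevity; the `10s` default mirrors Spark's default `spark.executor.heartbeatInterval`.

```python
from __future__ import annotations

import re

# Assumption: only these suffixes are supported; Spark accepts more units.
_SECONDS_PER_UNIT = {"ms": 0.001, "s": 1.0, "m": 60.0, "min": 60.0, "h": 3600.0}


def to_seconds(value: str) -> float:
    """Parse a duration such as "120s", "2min", or "500ms" into seconds."""
    match = re.fullmatch(r"(\d+)(ms|s|min|m|h)", value.strip())
    if match is None:
        raise ValueError(f"unsupported duration: {value!r}")
    number, unit = match.groups()
    return int(number) * _SECONDS_PER_UNIT[unit]


def submit_args(network_timeout: str, heartbeat_interval: str = "10s") -> list[str]:
    """Build spark-submit --conf flags for the two related settings.

    Rejects a heartbeat interval that is not strictly below the network
    timeout; Spark's docs recommend keeping it significantly smaller.
    """
    if to_seconds(heartbeat_interval) >= to_seconds(network_timeout):
        raise ValueError(
            "spark.executor.heartbeatInterval must be below spark.network.timeout"
        )
    return [
        "--conf", f"spark.network.timeout={network_timeout}",
        "--conf", f"spark.executor.heartbeatInterval={heartbeat_interval}",
    ]


print(" ".join(submit_args("300s", "30s")))
# --conf spark.network.timeout=300s --conf spark.executor.heartbeatInterval=30s
```

The same key/value pairs can be set programmatically instead, e.g. with `SparkSession.builder.config("spark.network.timeout", "300s")` in PySpark.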

3. What Happens If I Set `spark.network.timeout` Too Low?

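Ans: A timeout that is too low can make Spark give up on nodes that are merely slow, for example marking a busy executor as lost during a long garbage-collection pause. That triggers unnecessary task retries and recomputation, which can undermine the stability of your Spark application.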

4. Why Is It Essential To Monitor The `SparkNetworkBlockTimeout` Metric?

Ans: This metric reflects the number of timeouts Spark experiences during network requests. Monitoring it can signal if adjustments to `spark.network.timeout` are needed.

Conclusion

In the intricate dance of distributed computing, where nodes collaborate to execute complex tasks, `spark.network.timeout` emerges as a choreographer, ensuring that the rhythm of communication remains harmonious. By defining the maximum waiting period for network requests, this configuration parameter becomes an invaluable tool for maintaining application health, promoting fault tolerance, and tailoring Spark’s performance to the unique characteristics of each cluster. Understanding and judiciously configuring `spark.network.timeout` is a fundamental step toward orchestrating a symphony of computational prowess in the world of Apache Spark.