How to Run a Python ETL Script in Azure Data Factory | Explained

ETL (extract, transform, load) workflows are often written as Python scripts, and Azure Data Factory (ADF), Microsoft's cloud-based data integration service, can orchestrate them. This step-by-step guide explains how to run a Python ETL script from an Azure Data Factory pipeline.

It covers each stage, from setting up the Data Factory instance to configuring activities, defining linked services, scheduling, testing, and deployment.

Step-by-Step Guide to Running a Python ETL Script in Azure Data Factory

Here’s a detailed guide, with code snippets, for running a Python ETL script in Azure Data Factory.

1. Set up Azure Data Factory (ADF)

If you haven’t already, create an Azure Data Factory instance in the Azure portal: select Create a resource, search for Data Factory, then choose a subscription, resource group, region, and a globally unique name for the factory.

2. Create an Azure Data Factory Pipeline

Open your factory in Azure Data Factory Studio, go to the Author hub, and create a new pipeline. Give it a descriptive name that reflects its purpose, like “Python ETL Pipeline”.
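The pipeline needs a script to run. The following is a minimal sketch of a hypothetical testscript.py (the file name matches the example path used in step 4). The CSV layout, the customer and amount columns, and the input_path and output_path settings are all assumptions for illustration. It also assumes the script runs under ADF's Custom activity, which writes an activity.json file containing the activity's extendedProperties to the script's working directory; when that file is missing, the script falls back to default paths.

```python
"""Hypothetical testscript.py: a minimal CSV-to-CSV ETL job."""
import csv
import json
import os


def load_settings(path="activity.json"):
    """Return the Custom activity's extendedProperties, or {} when activity.json is absent."""
    if not os.path.exists(path):
        return {}
    with open(path, encoding="utf-8") as f:
        activity = json.load(f)
    return activity.get("typeProperties", {}).get("extendedProperties", {})


def extract(path):
    """Extract: read every row of a CSV file as a dict."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def transform(rows):
    """Transform: drop rows without an amount and normalize fields (assumed schema)."""
    cleaned = []
    for row in rows:
        amount = (row.get("amount") or "").strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append({
            "customer": row["customer"].strip().title(),
            "amount": f"{float(amount):.2f}",
        })
    return cleaned


def load(rows, path):
    """Load: write the transformed rows to a CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["customer", "amount"])
        writer.writeheader()
        writer.writerows(rows)


def main(settings_path="activity.json"):
    """Run extract -> transform -> load; paths come from extendedProperties if present."""
    settings = load_settings(settings_path)
    source = settings.get("input_path", "input.csv")
    target = settings.get("output_path", "output.csv")
    rows = transform(extract(source))
    load(rows, target)
    print(f"Wrote {len(rows)} rows to {target}")  # captured in the task's stdout
    return len(rows)


# As the Custom activity's entry point, end the file with:
# if __name__ == "__main__":
#     main()
```

Keeping extract, transform, and load as separate functions makes the transformation testable on its own, without any Azure resources.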

3. Add Activities to the Pipeline

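Within your pipeline, add activities that represent the steps of your ETL process. Azure Data Factory has no built-in “Execute Python Script” activity. To run a standalone Python script, use the Custom activity, which runs a command on an Azure Batch pool, or the Databricks Python activity, which runs a Python file on an Azure Databricks cluster. This guide uses the Custom activity. It needs an Azure Batch account with a pool whose nodes have Python installed, plus two linked services: one for the Batch account and one for the storage account that holds the script.

```json
{
  "name": "Run Python ETL Script",
  "type": "Custom",
  "linkedServiceName": {
    "referenceName": "<AzureBatchLinkedServiceName>",
    "type": "LinkedServiceReference"
  },
  "typeProperties": {
    "command": "python <ScriptFileName>",
    "folderPath": "<ContainerAndFolderPath>",
    "resourceLinkedService": {
      "referenceName": "<AzureStorageLinkedServiceName>",
      "type": "LinkedServiceReference"
    }
  },
  "policy": {
    "retry": 3,
    "retryIntervalInSeconds": 60
  }
}
```

4. Configure the Custom Activity

Configure the Custom activity with the necessary settings:

- Set `command` to the command line that runs your script. It runs on a Batch pool node, so Python and every package the script imports must be available there.

- Set `folderPath` and `resourceLinkedService` to the storage folder that holds the script and the linked service for that storage account. ADF copies the folder's files to the node before running the command.

- Optionally, pass settings to the script with `extendedProperties`, or datasets and linked services with `referenceObjects`. ADF writes them to `activity.json`, `datasets.json`, and `linkedServices.json` in the script's working directory.

For example, to run `testscript.py` from the `adftestcontainer` container:

```json
{
  "command": "python testscript.py",
  "folderPath": "adftestcontainer",
  "resourceLinkedService": {
    "referenceName": "<AzureStorageLinkedServiceName>",
    "type": "LinkedServiceReference"
  }
}
```

5. Define Linked Services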

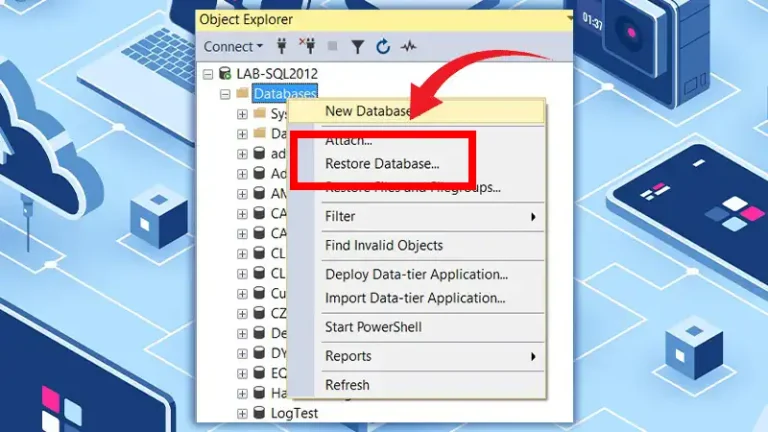

Configure linked services in Azure Data Factory to connect to your data sources and destinations. For instance, if your Python script interacts with Azure Storage or Azure SQL Database, you’ll need to create linked services for these resources.

```json
{
  "name": "<LinkedServiceName>",
  "properties": {
    "type": "AzureBlobStorage",
    "typeProperties": {
      "connectionString": "<ConnectionString>"
    }
  }
}
```

6. Set up Triggers (Optional)

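Optionally, set up triggers to automate the execution of your pipeline. ADF supports schedule triggers, tumbling window triggers, and event-based triggers (for example, when a blob is created in a storage account). You can also start a run on demand with Trigger now.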
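A schedule trigger is itself a JSON definition. The sketch below builds one as a Python dictionary and prints it as JSON, which you could save to a file for deployment. The property layout (ScheduleTrigger, recurrence, pipelineReference) follows ADF's schedule trigger schema; the helper name, trigger name, start time, and daily cadence are assumptions for illustration.

```python
import json

# Recurrence units accepted by an ADF schedule trigger.
ALLOWED_FREQUENCIES = {"Minute", "Hour", "Day", "Week", "Month"}


def schedule_trigger(name, pipeline_name, start_time, frequency="Day", interval=1):
    """Build a schedule trigger definition that runs one pipeline on a recurrence."""
    if frequency not in ALLOWED_FREQUENCIES:
        raise ValueError(f"unsupported frequency: {frequency}")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,   # unit of the recurrence
                    "interval": interval,     # run every N units
                    "startTime": start_time,  # ISO 8601 timestamp
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline_name,
                        "type": "PipelineReference",
                    },
                    "parameters": {},  # pipeline parameters, if any
                }
            ],
        },
    }


if __name__ == "__main__":
    trigger = schedule_trigger("DailyPythonETL", "Python ETL Pipeline", "2024-01-01T02:00:00Z")
    print(json.dumps(trigger, indent=2))
```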

7. Testing

Before deploying your pipeline to production, test it thoroughly. Use Debug in ADF Studio to run the pipeline without publishing it, and check each activity's output and error details in the Output tab.
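Debug runs spin up real compute, so it can pay to test the script's transformation logic locally first. A minimal sketch with the standard library's unittest, where `transform` is a hypothetical stand-in for your script's own transformation step:

```python
import unittest


def transform(rows):
    """Hypothetical transform: drop rows without an id and uppercase country codes."""
    return [
        {"id": int(r["id"]), "country": r["country"].strip().upper()}
        for r in rows
        if r.get("id")
    ]


class TransformTest(unittest.TestCase):
    def test_drops_rows_without_id(self):
        rows = [{"id": "", "country": "us"}, {"id": "7", "country": " de "}]
        self.assertEqual(transform(rows), [{"id": 7, "country": "DE"}])

    def test_empty_input(self):
        self.assertEqual(transform([]), [])
```

Saved as, say, test_transform.py, the tests run with `python -m unittest test_transform`.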

8. Deployment

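Once testing is complete, select Publish all in ADF Studio to deploy your changes. Triggers run only published pipelines, and a trigger must itself be published and activated before it fires.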

Frequently Asked Questions

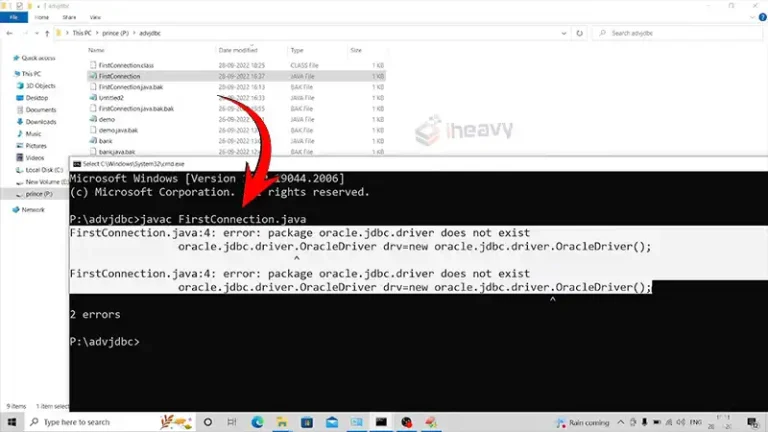

How do I manage Python dependencies for my script in Azure Batch or Databricks?

Azure Batch: Package your dependencies with the script, install them with a start task on the Batch pool (for example, pip install -r requirements.txt), or use a custom Docker image with the necessary dependencies installed.

Azure Databricks: Install libraries from PyPI on the cluster, list them in the Databricks activity's libraries settings, or run %pip install in your notebook.
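For the Azure Batch route, one way to package dependencies with the script is to install them into a folder next to it (for example, `pip install -r requirements.txt --target libs`, run on a platform matching the pool nodes so compiled packages are compatible) and upload that folder together with the script. The script then puts the folder on `sys.path` before importing from it. A minimal sketch; the `libs` folder name and the helper are assumptions:

```python
import os
import sys


def add_vendored_libs(folder="libs", base_dir=None):
    """Put a folder of bundled packages on sys.path so later imports can find them.

    base_dir defaults to the directory containing this script, assuming the
    libs folder was uploaded alongside it.
    """
    base = base_dir or os.path.dirname(os.path.abspath(__file__))
    path = os.path.join(base, folder)
    if not os.path.isdir(path):
        return False  # nothing bundled; rely on packages installed on the node
    if path not in sys.path:
        sys.path.insert(0, path)  # bundled versions take precedence
    return True


# At the top of the ETL script, before importing bundled packages:
# add_vendored_libs()
# import pandas  # resolved from ./libs if it was bundled there
```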

Can I schedule my Python ETL scripts using Azure Data Factory?

Yes, ADF provides scheduling capabilities through triggers. You can schedule your pipelines containing Python ETL scripts to run at specified times or intervals.

How do I pass parameters to a Python script running in Azure Databricks from ADF?

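In the Databricks Notebook activity, add base parameters (their values can come from pipeline parameters, for example @pipeline().parameters.run_date) and read each one in the notebook with dbutils.widgets.get("run_date"). With the Databricks Python activity, the parameters are passed to the script as command-line arguments, which it reads from sys.argv.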
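To keep notebook code testable outside Databricks, a small helper can read the widget when `dbutils` is available and fall back to an environment variable otherwise. `dbutils.widgets.get` is the Databricks utility; the helper, its fallback behavior, and the `run_date` name are assumptions for illustration.

```python
import os


def get_param(name, default=None, dbutils=None):
    """Return a parameter passed to a Databricks notebook, with a local fallback.

    Inside Databricks, pass the notebook's global dbutils; the value comes from
    the widget that the activity's base parameter populates. Elsewhere (for
    example, in local tests), read an environment variable of the same name.
    """
    if dbutils is not None:
        try:
            return dbutils.widgets.get(name)
        except Exception:
            return default  # widget not defined, e.g. notebook run by hand
    return os.environ.get(name, default)


# In a Databricks notebook:
# run_date = get_param("run_date", dbutils=dbutils)
```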

How do I handle error management and retries for Python ETL scripts in ADF?

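ADF lets you configure a retry policy on each activity: the number of retry attempts and the interval between them. For error handling, wrap risky steps of the script in try-except blocks, log the error, and let the script fail (for example, by exiting with a non-zero status) so that ADF marks the activity as failed. You can then handle the failure in the pipeline with an Upon Failure dependency or an If Condition activity.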
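A sketch of the script side, assuming the script runs in a Custom activity, where a non-zero exit code marks the activity as failed (so ADF's retry policy and any Upon Failure path take over). The in-script retry loop is an optional extra for transient errors such as network timeouts; the helper names, attempt count, and delay are hypothetical.

```python
import logging
import time

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")


def with_retries(func, attempts=3, delay_seconds=5.0, retry_on=(ConnectionError, TimeoutError)):
    """Call func(), retrying on the given transient exceptions with a fixed delay."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on as exc:
            if attempt == attempts:
                raise  # out of attempts: let the caller decide
            log.warning("Attempt %d/%d failed: %s; retrying", attempt, attempts, exc)
            time.sleep(delay_seconds)


def main(step):
    """Run one ETL step; return 0 on success and 1 on failure so ADF sees the outcome."""
    try:
        with_retries(step)
    except Exception:
        log.exception("ETL step failed")  # logs the traceback to stderr
        return 1
    log.info("ETL step succeeded")
    return 0


# In the real script: sys.exit(main(run_etl)), where run_etl is your ETL entry point.
```

Non-transient errors, such as bad input data, are not retried inside the script and fail the run immediately.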

Concluding Remarks

This guide provides a structured approach to setting up and running a Python ETL script in Azure Data Factory, enabling you to efficiently manage your data workflows in the cloud.