Why Is Cache Memory Faster? [Explained and Compared]

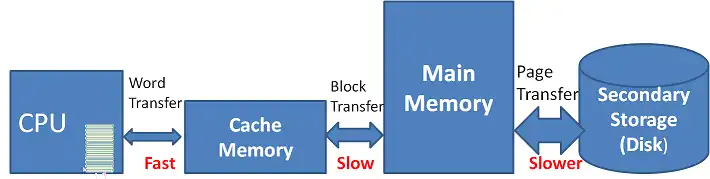

Cache memory is faster than other types of memory due to its proximity to the CPU and its use of high-speed SRAM technology. Positioned close to or on the CPU chip, it minimizes data travel time, enhancing access speeds. Its small size, hierarchical levels (L1, L2, L3), and techniques like associativity, prefetching, and write-back policies optimize data retrieval and reduce latency.

Features such as pipelining and parallelism further boost throughput, making cache memory crucial for improving overall system performance by quickly delivering data to the CPU. Let’s go deeper.

Key Factors That Propel Cache Ahead of RAM

Cache memory is faster than main memory (RAM) because of several key factors. These factors help in improving the overall performance of a computer system by reducing the time the CPU spends waiting for data.

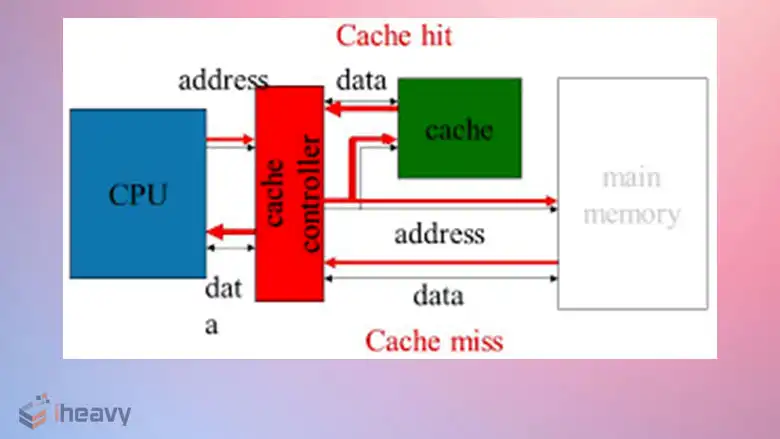

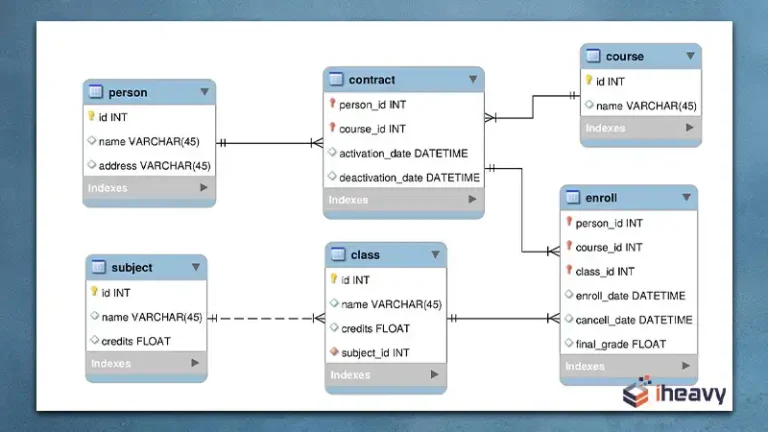

Fig: Cache memory is faster.

- Proximity to the CPU:

Cache memory is located much closer to the CPU compared to main memory. There are usually multiple levels of cache (L1, L2, and sometimes L3), with L1 being the closest and fastest. This proximity reduces the time it takes for data to travel between the CPU and the cache.

- Faster Access Time:

Cache memory is made from SRAM (Static Random Access Memory), which is faster than the DRAM (Dynamic Random Access Memory) used in main memory. SRAM doesn’t need to be refreshed like DRAM, allowing for quicker data retrieval.

- Smaller Size:

Cache memory is much smaller than main memory. A smaller memory array needs shorter wires and simpler address decoding, and fewer locations have to be checked on each lookup, so it can be accessed more quickly and efficiently.

- Designed for Speed:

Cache is specifically designed to store frequently accessed data and instructions. Associativity gives each block more than one possible location, so useful data is evicted less often, and prefetching predicts and loads the data the CPU is likely to need next.

- Reduced Latency:

Because cache memory is small, close to the CPU, and built from SRAM, it has lower latency, meaning it can deliver data to the CPU with minimal delay.

Architectural Features to Ponder for Tech Nerds

The internal architecture of cache memory is designed to maximize speed and efficiency. Here are some key architectural features that contribute to its speed:

1. Hierarchical Organization:

Multi-level Cache (L1, L2, L3): Modern processors use multiple levels of cache, each with different sizes and speeds. L1 is the smallest and fastest, while L3 is larger and slower. This hierarchy tends to keep the most frequently accessed data in the fastest cache level.

2. Associativity:

- Direct-Mapped Cache:

Each block of main memory maps to a specific cache line. It’s simple but can cause conflicts if multiple addresses map to the same line.

- Set-Associative Cache:

Combines features of direct-mapped and fully associative caches. It allows multiple blocks of data to be stored in the same set, reducing conflicts.

- Fully Associative Cache:

Any block can be stored in any cache line, minimizing conflicts but requiring more complex hardware for lookup.
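The three mapping schemes above can be compared with a small simulation. This is a minimal sketch, not a model of any real CPU: the function name `count_misses`, the 8-line cache, the access trace, and the use of LRU replacement inside each set are all assumptions made for illustration.

```python
from collections import OrderedDict

def count_misses(block_addresses, num_lines, ways):
    """Count misses for a cache of `num_lines` lines grouped into sets of `ways` lines.

    ways == 1 models a direct-mapped cache; ways == num_lines models a fully
    associative one. LRU replacement within a set is an assumption of this sketch.
    """
    num_sets = num_lines // ways
    sets = [OrderedDict() for _ in range(num_sets)]
    misses = 0
    for block in block_addresses:
        lines = sets[block % num_sets]      # the set this block maps to
        if block in lines:
            lines.move_to_end(block)        # hit: mark as most recently used
        else:
            misses += 1
            if len(lines) == ways:          # set is full: evict the LRU block
                lines.popitem(last=False)
            lines[block] = True
    return misses

# Blocks 0 and 8 both map to line 0 of an 8-line direct-mapped cache.
trace = [0, 8] * 4
direct_mapped = count_misses(trace, num_lines=8, ways=1)
two_way = count_misses(trace, num_lines=8, ways=2)
fully_assoc = count_misses(trace, num_lines=8, ways=8)
print(direct_mapped, two_way, fully_assoc)   # 8 2 2
```

In the direct-mapped case the two blocks keep evicting each other, so every access misses. With two or more ways they can sit side by side in the same set, and only the first access to each block misses.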

3. Tag and Indexing Mechanism:

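Each cache line has a tag that identifies the specific block of memory it stores. The index bits of the address select the line (in a direct-mapped cache) or the set (in a set-associative cache), so only the tags stored at that one location need to be compared. This mechanism speeds up the search process.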
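As a rough illustration of tags and indexes, the sketch below splits a byte address into its tag, set index, and byte offset within the line. The function name `split_address` and the parameters (64-byte lines, 64 sets) are illustrative assumptions, not values taken from a particular processor.

```python
def split_address(address, line_size=64, num_sets=64):
    """Split a byte address into (tag, set index, byte offset).

    line_size and num_sets are illustrative and must be powers of two.
    """
    offset_bits = line_size.bit_length() - 1    # log2(line_size)
    index_bits = num_sets.bit_length() - 1      # log2(num_sets)
    offset = address & (line_size - 1)          # position inside the line
    index = (address >> offset_bits) & (num_sets - 1)   # which set to look in
    tag = address >> (offset_bits + index_bits)         # identifies the block
    return tag, index, offset

print(split_address(0x12345))   # (18, 13, 5)
```

Two addresses with the same index but different tags compete for the same set, which is exactly the conflict case that associativity is meant to soften.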

4. Replacement Policies:

- Least Recently Used (LRU):

Replaces the least recently accessed data, which is likely not needed soon. This policy helps keep the most relevant data in the cache.

- Random Replacement:

Chooses a cache line to replace at random, which is simpler to implement but typically yields a lower hit rate than LRU.
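To make the LRU policy concrete, here is a minimal sketch of a single cache set that evicts its least recently used block. The class name `LRUSet` and the block labels are hypothetical; real hardware usually approximates LRU rather than tracking exact order.

```python
from collections import OrderedDict

class LRUSet:
    """A single cache set holding up to `capacity` blocks with LRU replacement."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()    # least recently used entry comes first

    def access(self, block):
        """Touch `block`; return the evicted block, or None if nothing was evicted."""
        if block in self.blocks:
            self.blocks.move_to_end(block)     # hit: now the most recently used
            return None
        evicted = None
        if len(self.blocks) == self.capacity:
            evicted, _ = self.blocks.popitem(last=False)   # drop the LRU block
        self.blocks[block] = True
        return evicted

cache_set = LRUSet(capacity=2)
evictions = [cache_set.access(b) for b in ["A", "B", "A", "C"]]
print(evictions)   # [None, None, None, 'B']
```

Because "A" was touched again just before "C" arrived, "B" is the least recently used block and is the one evicted.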

5. Write Policies:

- Write-Through:

Data is written to both the cache and main memory simultaneously, ensuring data consistency but with a higher write latency.

- Write-Back:

Data is written only to the cache and updated to main memory later, which reduces write latency and increases cache performance.
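The difference between the two write policies shows up in how many writes reach main memory. The sketch below is a deliberately simplified model: the function name `memory_writes` is hypothetical, each address is assumed to denote its own cache line, lines are assumed to stay in the cache for the whole stream, and dirty lines are flushed once at the end.

```python
def memory_writes(write_addresses, policy):
    """Count writes reaching main memory for a stream of cached stores.

    Simplifying assumptions: no evictions happen mid-stream, and dirty
    lines are flushed to memory once at the end.
    """
    if policy == "write-through":
        return len(write_addresses)      # every store also goes to memory
    if policy == "write-back":
        dirty = set(write_addresses)     # each modified line is marked dirty once
        return len(dirty)                # one memory write per dirty line on flush
    raise ValueError(f"unknown policy: {policy}")

stores = [0x100, 0x100, 0x100, 0x140, 0x100]
through = memory_writes(stores, "write-through")
back = memory_writes(stores, "write-back")
print(through, back)   # 5 2
```

Repeated stores to the same line are absorbed by a write-back cache, at the cost of main memory being temporarily out of date.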

6. Prefetching:

The cache can prefetch data that is likely to be needed soon based on access patterns. This anticipatory loading reduces waiting times for the CPU.
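One simple form of this idea is next-line prefetching: whenever a block is accessed, the following block is fetched as well. The sketch below is only an illustration under strong assumptions: the function name `demand_misses` is hypothetical, the cache is modeled as an unbounded set, and prefetched data is assumed to arrive before it is needed.

```python
def demand_misses(blocks, prefetch_next):
    """Count demand misses; the cache is modeled as an unbounded set of blocks."""
    cached = set()
    misses = 0
    for block in blocks:
        if block not in cached:
            misses += 1
            cached.add(block)
        if prefetch_next:
            cached.add(block + 1)    # next-line prefetch: fetch the neighbor early
    return misses

sequential = list(range(16))
without_prefetch = demand_misses(sequential, prefetch_next=False)
with_prefetch = demand_misses(sequential, prefetch_next=True)
print(without_prefetch, with_prefetch)   # 16 1
```

On a sequential scan only the very first access misses once prefetching is on. On a random access pattern the same prefetcher would mostly fetch blocks that are never used.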

7. Line Size:

Cache lines (blocks) contain a set amount of data. Larger lines can transfer more data at once but may cause more conflicts, because a cache of the same capacity then holds fewer lines, while smaller lines are more granular. The size is chosen to balance speed and efficiency.
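The line-size trade-off can be seen in a toy direct-mapped cache of fixed capacity. Everything here is an assumption chosen for illustration: the function name `count_line_misses`, the 256-byte capacity, the two line sizes, and both access traces.

```python
def count_line_misses(addresses, capacity, line_size):
    """Misses in a direct-mapped cache of `capacity` bytes with `line_size`-byte lines."""
    num_lines = capacity // line_size
    lines = [None] * num_lines         # block number currently held by each line
    misses = 0
    for addr in addresses:
        block = addr // line_size
        slot = block % num_lines
        if lines[slot] != block:
            misses += 1
            lines[slot] = block        # load the block, replacing the old one
    return misses

sequential = list(range(0, 256, 4))    # one pass over 256 bytes, 4 bytes at a time
scattered = [0, 272] * 4               # two hot words that are far apart

seq_small = count_line_misses(sequential, capacity=256, line_size=16)
seq_large = count_line_misses(sequential, capacity=256, line_size=64)
sca_small = count_line_misses(scattered, capacity=256, line_size=16)
sca_large = count_line_misses(scattered, capacity=256, line_size=64)
print(seq_small, seq_large, sca_small, sca_large)   # 16 4 2 8
```

The larger line wins on the sequential pass because each miss brings in more neighboring data, but it loses on the scattered trace because the cache now has only four lines and the two hot words collide.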

8. Pipelining and Parallelism:

The cache architecture often supports pipelining and parallel access to different cache lines or levels, allowing multiple operations to occur simultaneously, increasing throughput.

How Fast Is Cache Memory Really?

Here’s a comparison table highlighting the typical speeds of cache memory versus other types of memory in a computer system:

| Category | Memory Type | Speed (Access Time) | Description |
| --- | --- | --- | --- |
| Caches | L1 Cache | 1-3 ns | Closest to the CPU, smallest size, very fast, often divided into instruction and data caches. |
| | L2 Cache | 3-10 ns | Larger than L1, slightly slower, but still faster than main memory. Often shared between cores. |
| | L3 Cache | 10-30 ns | Largest and slowest cache, shared across all cores in a CPU. Still much faster than RAM. |
| Others | Main Memory (DRAM) | 50-100 ns | Much larger than cache, but significantly slower. Used for active data storage. |
| | SSD (Solid State Drive) | 50-100 µs | Non-volatile storage, significantly slower than RAM and cache, but faster than HDDs. |
| | HDD (Hard Disk Drive) | 5-10 ms | Magnetic storage, slowest in terms of data access, used for long-term storage. |

Frequently Asked Questions

- What is the significance of SRAM in cache memory speed?

SRAM (Static RAM) used in cache memory is faster than DRAM because it does not require refreshing and has shorter access times. SRAM’s architecture allows for rapid access to stored data.

- How does cache associativity impact its speed?

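Cache associativity determines how many cache lines a given memory block may occupy: one in a direct-mapped cache, a few in a set-associative cache, and any line in a fully associative cache. Higher associativity reduces conflicts and so increases the likelihood of cache hits, but each lookup has to compare more tags, which can add latency and hardware cost. Set-associative caches are a compromise between the two extremes.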

- What is a cache hit and how does it relate to cache speed?

A cache hit occurs when the data requested by the CPU is found in the cache. High cache hit rates mean the CPU can access data quickly without going to the slower main memory, thus improving overall speed.
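The link between hit rate and speed can be put into numbers with an average access time estimate. This is a rough sketch: the function name `average_access_time` is hypothetical, the latencies are picked from within the ranges in the table above, the hit rates are invented for illustration, and the model assumes each level is checked only after the faster one misses.

```python
def average_access_time(levels, memory_latency_ns):
    """Estimate average access time in nanoseconds.

    levels: list of (latency_ns, hit_rate) pairs ordered from L1 outward.
    Each level is consulted only when all faster levels have missed.
    """
    total = 0.0
    reach_probability = 1.0              # chance that an access gets this far
    for latency_ns, hit_rate in levels:
        total += reach_probability * latency_ns
        reach_probability *= (1.0 - hit_rate)
    return total + reach_probability * memory_latency_ns

# Assumed values: (latency in ns, hit rate) for L1, L2, L3, then DRAM latency.
with_caches = average_access_time([(1, 0.95), (5, 0.80), (20, 0.50)], 80)
without_caches = average_access_time([], 80)
print(round(with_caches, 2), without_caches)
```

With these assumed numbers the average access takes roughly 1.85 ns instead of 80 ns, which is why a high hit rate matters far more than the raw speed of main memory.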

Concluding Remarks

Cache memory acts like a high-speed express lane in computing, swiftly connecting the CPU to frequently accessed data and bypassing the slower paths of main memory. Positioned close to the CPU and using ultra-fast SRAM, it significantly speeds up data retrieval, ensuring that processing runs smoothly and efficiently without delays.